Introduction

Welcome to The Embedded Rust Book: An introductory book about using the Rust Programming Language on "Bare Metal" embedded systems, such as Microcontrollers.

Who Embedded Rust is For

Embedded Rust is for everyone who wants to do embedded programming while taking advantage of the higher-level concepts and safety guarantees the Rust language provides. (See also Who Rust Is For)

Scope

The goals of this book are:

- Get developers up to speed with embedded Rust development, e.g. how to set up a development environment.

- Share current best practices about using Rust for embedded development, e.g. how to best use Rust language features to write more correct embedded software.

- Serve as a cookbook in some cases, e.g. how do I mix C and Rust in a single project?

This book tries to be as general as possible but to make things easier for both the readers and the writers it uses the ARM Cortex-M architecture in all its examples. However, the book doesn't assume that the reader is familiar with this particular architecture and explains details particular to this architecture where required.

Who This Book is For

This book caters to people with either some embedded background or some Rust background; however, we believe everybody curious about embedded Rust programming can get something out of it. If you have no prior knowledge, we suggest you read the "Assumptions and Prerequisites" section and catch up on missing knowledge to get more out of the book and improve your reading experience. You can check out the "Other Resources" section to find resources on topics you might want to catch up on.

Assumptions and Prerequisites

- You are comfortable using the Rust Programming Language, and have written, run, and debugged Rust applications on a desktop environment. You should also be familiar with the idioms of the 2018 edition as this book targets Rust 2018.

- You are comfortable developing and debugging embedded systems in another language such as C, C++, or Ada, and are familiar with concepts such as:

- Cross Compilation

- Memory Mapped Peripherals

- Interrupts

- Common interfaces such as I2C, SPI, Serial, etc.

Other Resources

If you are unfamiliar with anything mentioned above or if you want more information about a specific topic mentioned in this book you might find some of these resources helpful.

| Topic | Resource | Description |

|---|---|---|

| Rust | Rust Book | If you are not yet comfortable with Rust, we highly suggest reading this book. |

| Rust, Embedded | Discovery Book | If you have never done any embedded programming, this book might be a better start. |

| Rust, Embedded | Embedded Rust Bookshelf | Here you can find several other resources provided by Rust's Embedded Working Group. |

| Rust, Embedded | Embedonomicon | The nitty gritty details when doing embedded programming in Rust. |

| Rust, Embedded | embedded FAQ | Frequently asked questions about Rust in an embedded context. |

| Rust, Embedded | Comprehensive Rust 🦀: Bare Metal | Teaching material for a 1-day class on bare-metal Rust development. |

| Interrupts | Interrupt | - |

| Memory-mapped IO/Peripherals | Memory-mapped I/O | - |

| SPI, UART, RS232, USB, I2C, TTL | Stack Exchange about SPI, UART, and other interfaces | - |

Translations

This book has been translated by generous volunteers. If you would like your translation listed here, please open a PR to add it.

How to Use This Book

This book generally assumes that you’re reading it front-to-back. Later chapters build on concepts in earlier chapters, and earlier chapters may not dig into details on a topic, revisiting the topic in a later chapter.

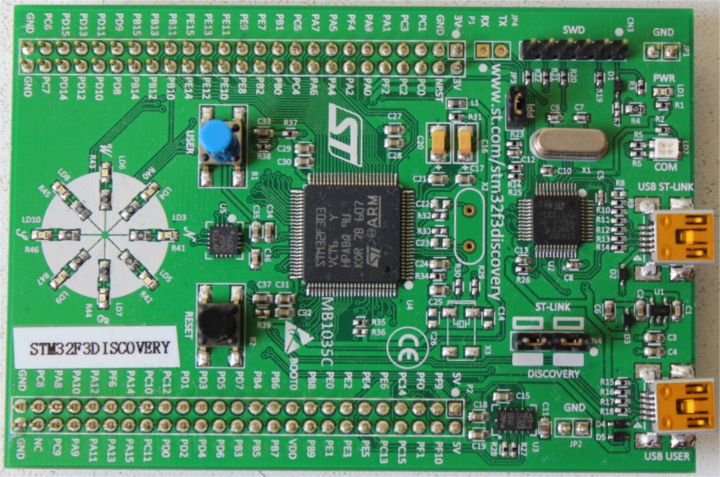

This book will be using the STM32F3DISCOVERY development board from STMicroelectronics for the majority of the examples contained within. This board is based on the ARM Cortex-M architecture, and while basic functionality is the same across most CPUs based on this architecture, peripherals and other implementation details of Microcontrollers are different between different vendors, and often even different between Microcontroller families from the same vendor.

For this reason, we suggest purchasing the STM32F3DISCOVERY development board for the purpose of following the examples in this book.

Contributing to This Book

The work on this book is coordinated in this repository and is mainly developed by the resources team.

If you have trouble following the instructions in this book or find that some section of the book is not clear enough or hard to follow then that's a bug and it should be reported in the issue tracker of this book.

Pull requests fixing typos and adding new content are very welcome!

Re-using this material

This book is distributed under the following licenses:

- The code samples and free-standing Cargo projects contained within this book are licensed under the terms of both the MIT License and the Apache License v2.0.

- The written prose, pictures and diagrams contained within this book are licensed under the terms of the Creative Commons CC-BY-SA v4.0 license.

TL;DR: If you want to use our text or images in your work, you need to:

- Give the appropriate credit (i.e. mention this book on your slide, and provide a link to the relevant page)

- Provide a link to the CC-BY-SA v4.0 licence

- Indicate if you have changed the material in any way, and make any changes to our material available under the same licence

Also, please do let us know if you find this book useful!

Meet Your Hardware

Let's get familiar with the hardware we'll be working with.

STM32F3DISCOVERY (the "F3")

What does this board contain?

- An STM32F303VCT6 microcontroller. This microcontroller has

  - A single-core ARM Cortex-M4F processor with hardware support for single-precision floating point operations and a maximum clock frequency of 72 MHz.

  - 256 KiB of "Flash" memory. (1 KiB = 1024 bytes)

  - 48 KiB of RAM.

  - A variety of integrated peripherals such as timers, I2C, SPI and USART.

  - General purpose Input Output (GPIO) and other types of pins accessible through the two rows of headers alongside the board.

  - A USB interface accessible through the USB port labeled "USB USER".

- An accelerometer as part of the LSM303DLHC chip.

- A magnetometer as part of the LSM303DLHC chip.

- 8 user LEDs arranged in the shape of a compass.

- A second microcontroller: an STM32F103. This microcontroller is actually part of an on-board programmer / debugger and is connected to the USB port named "USB ST-LINK".

For a more detailed list of features and further specifications of the board take a look at the STMicroelectronics website.

A word of caution: be careful if you want to apply external signals to the board. The pins of the STM32F303VCT6 microcontroller take a nominal voltage of 3.3 volts. For further information consult the 6.2 Absolute maximum ratings section in the manual.

A no_std Rust Environment

The term Embedded Programming is used for a wide range of different classes of programming, ranging from programming 8-bit MCUs (like the ST72325xx) with just a few KB of RAM and ROM, up to systems like the Raspberry Pi (Model B 3+) which has a 32/64-bit 4-core Cortex-A53 @ 1.4 GHz and 1 GB of RAM. Different restrictions/limitations will apply when writing code depending on what kind of target and use case you have.

There are two general Embedded Programming classifications:

Hosted Environments

These kinds of environments are close to a normal PC environment. What this means is that you are provided with a system interface, e.g. POSIX, that provides you with primitives to interact with various systems, such as file systems, networking, memory management, threads, etc. Standard libraries in turn usually depend on these primitives to implement their functionality. You may also have some sort of sysroot and restrictions on RAM/ROM usage, and perhaps some special HW or I/Os. Overall it feels like coding on a special-purpose PC environment.

Bare Metal Environments

In a bare metal environment no code has been loaded before your program. Without the software provided by an OS we cannot load the standard library. Instead the program, along with the crates it uses, can only use the hardware (bare metal) to run. To prevent Rust from loading the standard library use no_std. The platform-agnostic parts of the standard library are available through libcore. libcore also excludes things which are not always desirable in an embedded environment. One of these things is a memory allocator for dynamic memory allocation. If you require this or any other functionality, there are often crates which provide it.
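To give a feel for what "only libcore" means in practice, here is a minimal sketch that formats text into a fixed-size stack buffer through core::fmt::Write instead of allocating a String. The names FixedBuf and format_reading are hypothetical and chosen only for illustration. The #![no_std] attribute is left out so the snippet can be compiled and tried on a desktop, but it only touches items from core.

```rust
use core::fmt::{self, Write};

/// A fixed-capacity text buffer that lives entirely on the stack.
/// (Hypothetical helper for illustration, not part of any crate.)
pub struct FixedBuf<const N: usize> {
    bytes: [u8; N],
    len: usize,
}

impl<const N: usize> FixedBuf<N> {
    pub const fn new() -> Self {
        Self { bytes: [0; N], len: 0 }
    }

    /// Returns the text written so far.
    pub fn as_str(&self) -> &str {
        // Only whole `&str` values are copied in, so the bytes are valid UTF-8.
        core::str::from_utf8(&self.bytes[..self.len]).unwrap_or("")
    }
}

impl<const N: usize> Write for FixedBuf<N> {
    fn write_str(&mut self, s: &str) -> fmt::Result {
        let end = self.len + s.len();
        if end > N {
            // Out of space: report an error instead of allocating more memory.
            return Err(fmt::Error);
        }
        self.bytes[self.len..end].copy_from_slice(s.as_bytes());
        self.len = end;
        Ok(())
    }
}

/// Formats a sensor reading without using the heap.
pub fn format_reading<const N: usize>(buf: &mut FixedBuf<N>, celsius: i32) -> fmt::Result {
    write!(buf, "temp={}C", celsius)
}
```

The design choice to return an error when the buffer is full, rather than growing it, is typical when there is no allocator: the capacity is decided at compile time.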

The libstd Runtime

As mentioned before, using libstd requires some sort of system integration, but this is not only because libstd provides a common way of accessing OS abstractions: it also provides a runtime. This runtime, among other things, takes care of setting up stack overflow protection, processing command line arguments, and spawning the main thread before a program's main function is invoked. This runtime also won't be available in a no_std environment.

Summary

#![no_std] is a crate-level attribute that indicates that the crate will link to the core-crate instead of the std-crate. The libcore crate in turn is a platform-agnostic subset of the std crate which makes no assumptions about the system the program will run on. As such, it provides APIs for language primitives like floats, strings and slices, as well as APIs that expose processor features like atomic operations and SIMD instructions. However it lacks APIs for anything that involves platform integration. Because of these properties no_std and libcore code can be used for any kind of bootstrapping (stage 0) code like bootloaders, firmware or kernels.

Overview

| feature | no_std | std |

|---|---|---|

| heap (dynamic memory) | * | ✓ |

| collections (Vec, BTreeMap, etc) | ** | ✓ |

| stack overflow protection | ✘ | ✓ |

| runs init code before main | ✘ | ✓ |

| libstd available | ✘ | ✓ |

| libcore available | ✓ | ✓ |

| writing firmware, kernel, or bootloader code | ✓ | ✘ |

* Only if you use the alloc crate and use a suitable allocator like alloc-cortex-m.

** Only if you use the alloc crate, which provides the collection types, and configure a global default allocator.

** HashMap and HashSet are not available due to a lack of a secure random number generator.
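As a rough sketch of the heap and collections rows in the table: on current toolchains Vec and BTreeMap are exported from the alloc crate, so a no_std crate can use them once a global allocator is in place. The function names below are hypothetical. The snippet is written so that it also builds on a desktop, where the system allocator is already configured; on a bare metal target you would additionally need #![no_std] and a #[global_allocator].

```rust
// `alloc` ships with the toolchain. In a `#![no_std]` crate this line is what
// makes heap-backed types available (a global allocator must also be set up).
extern crate alloc;

use alloc::collections::BTreeMap;
use alloc::vec::Vec;

/// Counts how often each event code occurs, using the ordered map from `alloc`.
/// (`BTreeMap` stands in for `HashMap`, which is not available without `std`.)
pub fn count_events(events: &[u8]) -> BTreeMap<u8, usize> {
    let mut counts = BTreeMap::new();
    for &code in events {
        *counts.entry(code).or_insert(0) += 1;
    }
    counts
}

/// Collects the distinct codes, in ascending order, into a heap-allocated `Vec`.
pub fn distinct_codes(events: &[u8]) -> Vec<u8> {
    count_events(events).keys().copied().collect()
}
```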

See Also

Tooling

Dealing with microcontrollers involves using several different tools as we'll be dealing with an architecture different than your laptop's and we'll have to run and debug programs on a remote device.

We'll use all the tools listed below. Any recent version should work when a minimum version is not specified, but we have listed the versions we have tested.

- Rust 1.31, 1.31-beta, or a newer toolchain PLUS ARM Cortex-M compilation support.

- cargo-binutils ~0.1.4

- qemu-system-arm. Tested versions: 3.0.0

- OpenOCD >=0.8. Tested versions: v0.9.0 and v0.10.0

- GDB with ARM support. Version 7.12 or newer highly recommended. Tested versions: 7.10, 7.11, 7.12 and 8.1

- cargo-generate or git. These tools are optional but will make it easier to follow along with the book.

The text below explains why we are using these tools. Installation instructions can be found on the next page.

cargo-generate OR git

Bare metal programs are non-standard (no_std) Rust programs that require some adjustments to the linking process in order to get the memory layout of the program right. This requires some additional files (like linker scripts) and settings (like linker flags). We have packaged those for you in a template such that you only need to fill in the missing information (such as the project name and the characteristics of your target hardware).

Our template is compatible with cargo-generate: a Cargo subcommand for creating new Cargo projects from templates. You can also download the template using git, curl, wget, or your web browser.

cargo-binutils

cargo-binutils is a collection of Cargo subcommands that make it easy to use the LLVM tools that are shipped with the Rust toolchain. These tools include the LLVM versions of objdump, nm and size and are used for inspecting binaries.

The advantage of using these tools over GNU binutils is that (a) installing the LLVM tools is the same one-command installation (rustup component add llvm-tools) regardless of your OS and (b) tools like objdump support all the architectures that rustc supports -- from ARM to x86_64 -- because they both share the same LLVM backend.

qemu-system-arm

QEMU is an emulator. In this case we use the variant that can fully emulate ARM systems. We use QEMU to run embedded programs on the host. Thanks to this you can follow some parts of this book even if you don't have any hardware with you!

Tooling for Embedded Rust Debugging

Overview

Debugging embedded systems in Rust requires specialized tools including software to manage the debugging process, debuggers to inspect and control program execution, and hardware probes to facilitate interaction between the host and the embedded device. This document outlines essential software tools like Probe-rs and OpenOCD, which simplify and support the debugging process, alongside prominent debuggers such as GDB and the Probe-rs Visual Studio Code extension. Additionally, it covers key hardware probes such as Rusty-probe, ST-Link, J-Link, and MCU-Link, which are integral for effective debugging and programming of embedded devices.

Software that drives debugging tools

Probe-rs

Probe-rs is modern, Rust-focused software designed to work with debug probes in embedded systems. Unlike OpenOCD, Probe-rs is built with simplicity in mind and aims to reduce the configuration burden often found in other debugging solutions. It supports various probes and targets, providing a high-level interface for interacting with embedded hardware. Probe-rs integrates directly with Rust tooling and, through its extension, with Visual Studio Code, allowing developers to streamline their debugging workflow.

OpenOCD (Open On-Chip Debugger)

OpenOCD is an open-source software tool used for debugging, testing, and programming embedded systems. It provides an interface between the host system and embedded hardware, supporting various transport layers like JTAG and SWD (Serial Wire Debug). OpenOCD integrates with GDB, which is a debugger. OpenOCD is widely supported, with extensive documentation and a large community, but may require complex configuration, especially for custom embedded setups.

Debuggers

A debugger allows developers to inspect and control the execution of a program in order to identify and correct errors or bugs. It provides functionalities such as setting breakpoints, stepping through code line by line, and examining the values of variables and memory states. Debuggers are essential for thorough software development and maintenance, enabling developers to ensure that their code behaves as intended under various conditions.

Debuggers know how to:

- Interact with the memory mapped registers.

- Set Breakpoints/Watchpoints.

- Read and write to the memory mapped registers.

- Detect when the MCU has been halted for a debug event.

- Continue MCU execution after a debug event has been encountered.

- Erase and write to the microcontroller's FLASH.

Probe-rs Visual Studio Code Extension

Probe-rs has a Visual Studio Code extension, providing a seamless debugging experience without extensive setup. Through this connection, developers can use Rust-specific features like pretty printing and detailed error messages, ensuring that their debugging process aligns with the Rust ecosystem.

GDB (GNU Debugger)

GDB is a versatile debugging tool that allows developers to examine the state of programs while they run or after they crash. For embedded Rust, GDB connects to the target system via OpenOCD or other debugging servers to interact with the embedded code. GDB is highly configurable and supports features like remote debugging, variable inspection, and conditional breakpoints. It can be used on a variety of platforms, and has extensive support for Rust-specific debugging needs, such as pretty printing and integration with IDEs.

Probes

A hardware probe is a device used in the development and debugging of embedded systems to facilitate communication between a host computer and the target embedded device. It typically supports protocols like JTAG or SWD, enabling it to program, debug, and analyze the microcontroller or microprocessor on the embedded system. Hardware probes are crucial for developers to set breakpoints, step through code, and inspect memory and processor registers, effectively allowing them to diagnose and fix issues in real-time.

Rusty-probe

Rusty-probe is an open-sourced USB-based hardware debugging probe designed to work with probe-rs. The combination of Rusty-Probe and probe-rs provides an easy-to-use, cost-effective solution for developers working with embedded Rust applications.

ST-Link

The ST-Link is a popular debugging and programming probe developed by STMicroelectronics primarily for their STM32 and STM8 microcontroller series. It supports both debugging and programming via JTAG or SWD (Serial Wire Debug) interfaces. ST-Link is widely used due to its direct support from STMicroelectronics' extensive range of development boards and its integration into major IDEs, making it a convenient choice for developers working with STM microcontrollers.

J-Link

J-Link, developed by SEGGER Microcontroller, is a robust and versatile debugger supporting a wide range of CPU cores and devices beyond just ARM, such as RISC-V. Known for its high performance and reliability, J-Link supports various communication interfaces, including JTAG, SWD, and fine-pitch JTAG interfaces. It is favored for its advanced features like unlimited breakpoints in flash memory and its compatibility with a multitude of development environments.

MCU-Link

MCU-Link is a debugging probe that also functions as a programmer, provided by NXP Semiconductors. It supports a variety of ARM Cortex microcontrollers and interfaces seamlessly with development tools like MCUXpresso IDE. MCU-Link is particularly notable for its versatility and affordability, making it an accessible option for hobbyists, educators, and professional developers alike.

Installing the tools

This page contains OS-agnostic installation instructions for a few of the tools:

Rust Toolchain

Install rustup by following the instructions at https://rustup.rs.

NOTE Make sure you have a compiler version equal to or newer than 1.31. rustc -V should report a version and date at least as new as the ones shown below.

$ rustc -V

rustc 1.31.1 (b6c32da9b 2018-12-18)
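If you would rather check this from a script than by eye, the comparison can be sketched as follows. This is only an illustration under the assumption that the line has the shape shown above ("rustc", then a dotted version, then the commit and date); parse_rustc_version and is_supported are hypothetical helper names.

```rust
/// Extracts `(major, minor)` from a line such as
/// `rustc 1.31.1 (b6c32da9b 2018-12-18)`.
pub fn parse_rustc_version(line: &str) -> Option<(u32, u32)> {
    let mut words = line.split_whitespace();
    if words.next()? != "rustc" {
        return None;
    }
    // Drop any `-beta` / `-nightly` suffix before splitting on dots.
    let version = words.next()?.split('-').next()?;
    let mut parts = version.split('.');
    let major: u32 = parts.next()?.parse().ok()?;
    let minor: u32 = parts.next()?.parse().ok()?;
    Some((major, minor))
}

/// Returns true when the reported compiler is at least 1.31,
/// the minimum version this book asks for.
pub fn is_supported(line: &str) -> bool {
    // Tuples compare lexicographically: major first, then minor.
    matches!(parse_rustc_version(line), Some(v) if v >= (1, 31))
}
```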

For bandwidth and disk usage concerns the default installation only supports native compilation. To add cross compilation support for the ARM Cortex-M architectures choose one of the following compilation targets. For the STM32F3DISCOVERY board used for the examples in this book, use the thumbv7em-none-eabihf target.

Find the best Cortex-M for you.

Cortex-M0, M0+, and M1 (ARMv6-M architecture):

rustup target add thumbv6m-none-eabi

Cortex-M3 (ARMv7-M architecture):

rustup target add thumbv7m-none-eabi

Cortex-M4 and M7 without hardware floating point (ARMv7E-M architecture):

rustup target add thumbv7em-none-eabi

Cortex-M4F and M7F with hardware floating point (ARMv7E-M architecture):

rustup target add thumbv7em-none-eabihf

Cortex-M23 (ARMv8-M architecture):

rustup target add thumbv8m.base-none-eabi

Cortex-M33 and M35P (ARMv8-M architecture):

rustup target add thumbv8m.main-none-eabi

Cortex-M33F and M35PF with hardware floating point (ARMv8-M architecture):

rustup target add thumbv8m.main-none-eabihf
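The list above is essentially a lookup table, and it can be sketched as one. The function below is hypothetical: it takes the core name without the "F" suffix plus a flag for hardware floating point, and returns the target triple from the list, or None for combinations the list does not mention.

```rust
/// Maps a Cortex-M core name to the rustup target triple listed above.
/// `hard_float` selects the `eabihf` variant where the list offers one.
pub fn target_for(core_name: &str, hard_float: bool) -> Option<&'static str> {
    let triple = match (core_name, hard_float) {
        ("M0" | "M0+" | "M1", false) => "thumbv6m-none-eabi",
        ("M3", false) => "thumbv7m-none-eabi",
        ("M4" | "M7", false) => "thumbv7em-none-eabi",
        ("M4" | "M7", true) => "thumbv7em-none-eabihf",
        ("M23", false) => "thumbv8m.base-none-eabi",
        ("M33" | "M35P", false) => "thumbv8m.main-none-eabi",
        ("M33" | "M35P", true) => "thumbv8m.main-none-eabihf",
        // Anything else is not covered by the list in this chapter.
        _ => return None,
    };
    Some(triple)
}
```

For the STM32F3DISCOVERY, whose Cortex-M4F has a hardware FPU, this corresponds to target_for("M4", true).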

cargo-binutils

cargo install cargo-binutils

rustup component add llvm-tools

WINDOWS: as a prerequisite, C++ Build Tools for Visual Studio 2019 must be installed. https://visualstudio.microsoft.com/thank-you-downloading-visual-studio/?sku=BuildTools&rel=16

cargo-generate

We'll use this later to generate a project from a template.

cargo install cargo-generate

Note: on some Linux distros (e.g. Ubuntu) you may need to install the packages libssl-dev and pkg-config prior to installing cargo-generate.

OS-Specific Instructions

Now follow the instructions specific to the OS you are using:

Linux

Here are the installation commands for a few Linux distributions.

Packages

- Ubuntu 18.04 or newer / Debian stretch or newer

NOTE

gdb-multiarch is the GDB command you'll use to debug your ARM Cortex-M programs

sudo apt install gdb-multiarch openocd qemu-system-arm

- Ubuntu 14.04 and 16.04

NOTE

arm-none-eabi-gdb is the GDB command you'll use to debug your ARM Cortex-M programs

sudo apt install gdb-arm-none-eabi openocd qemu-system-arm

- Fedora 27 or newer

sudo dnf install gdb openocd qemu-system-arm

- Arch Linux

NOTE

arm-none-eabi-gdb is the GDB command you'll use to debug ARM Cortex-M programs

sudo pacman -S arm-none-eabi-gdb qemu-system-arm openocd

udev rules

This rule lets you use OpenOCD with the Discovery board without root privilege.

Create the file /etc/udev/rules.d/70-st-link.rules with the contents shown below.

# STM32F3DISCOVERY rev A/B - ST-LINK/V2

ATTRS{idVendor}=="0483", ATTRS{idProduct}=="3748", TAG+="uaccess"

# STM32F3DISCOVERY rev C+ - ST-LINK/V2-1

ATTRS{idVendor}=="0483", ATTRS{idProduct}=="374b", TAG+="uaccess"

Then reload all the udev rules with:

sudo udevadm control --reload-rules

If you had the board plugged into your laptop, unplug it and then plug it in again.

You can check the permissions by running this command:

lsusb

Which should show something like

(..)

Bus 001 Device 018: ID 0483:374b STMicroelectronics ST-LINK/V2.1

(..)

Take note of the bus and device numbers. Use those numbers to create a path like /dev/bus/usb/<bus>/<device>. Then use this path like so:

ls -l /dev/bus/usb/001/018

crw-------+ 1 root root 189, 17 Sep 13 12:34 /dev/bus/usb/001/018

getfacl /dev/bus/usb/001/018 | grep user

user::rw-

user:you:rw-

The + appended to permissions indicates the existence of an extended permission. The getfacl output shows that your user (you in this example) can make use of this device.
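The step of turning an lsusb line into a device path can be sketched in a few lines. This is an illustration only: usb_device_path is a hypothetical helper, and it assumes the line has the "Bus NNN Device NNN: ID ..." shape shown in the example output above.

```rust
/// Builds `/dev/bus/usb/<bus>/<device>` from one line of `lsusb` output, e.g.
/// `Bus 001 Device 018: ID 0483:374b STMicroelectronics ST-LINK/V2.1`.
pub fn usb_device_path(lsusb_line: &str) -> Option<String> {
    let mut words = lsusb_line.split_whitespace();
    if words.next()? != "Bus" {
        return None;
    }
    let bus = words.next()?;
    if words.next()? != "Device" {
        return None;
    }
    // The device number is followed by a colon in the `lsusb` output.
    let device = words.next()?.trim_end_matches(':');

    // Keep the zero padding: the path uses the digits exactly as printed.
    let is_number = |s: &str| !s.is_empty() && s.bytes().all(|b| b.is_ascii_digit());
    if is_number(bus) && is_number(device) {
        Some(format!("/dev/bus/usb/{}/{}", bus, device))
    } else {
        None
    }
}
```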

Now, go to the next section.

macOS

All the tools can be installed using Homebrew or MacPorts:

Install tools with Homebrew

$ # GDB

$ brew install arm-none-eabi-gdb

$ # OpenOCD

$ brew install openocd

$ # QEMU

$ brew install qemu

NOTE If OpenOCD crashes you may need to install the latest version using:

$ brew install --HEAD openocd

Install tools with MacPorts

$ # GDB

$ sudo port install arm-none-eabi-gcc

$ # OpenOCD

$ sudo port install openocd

$ # QEMU

$ sudo port install qemu

That's all! Go to the next section.

Windows

arm-none-eabi-gdb

ARM provides .exe installers for Windows. Grab one from here, and follow the instructions.

Just before the installation process finishes tick/select the "Add path to environment variable" option. Then verify that the tools are in your %PATH%:

$ arm-none-eabi-gdb -v

GNU gdb (GNU Tools for Arm Embedded Processors 7-2018-q2-update) 8.1.0.20180315-git

(..)

OpenOCD

There's no official binary release of OpenOCD for Windows, but if you're not in the mood to compile it yourself, the xPack project provides a binary distribution. Follow the provided installation instructions. Then update your %PATH% environment variable to include the path where the binaries were installed (C:\Users\USERNAME\AppData\Roaming\xPacks\@xpack-dev-tools\openocd\0.10.0-13.1\.content\bin\, if you've been using the easy install).

Verify that OpenOCD is in your %PATH% with:

$ openocd -v

Open On-Chip Debugger 0.10.0

(..)

QEMU

Grab QEMU from the official website.

ST-LINK USB driver

You'll also need to install this USB driver or OpenOCD won't work. Follow the installer instructions and make sure you install the right version (32-bit or 64-bit) of the driver.

That's all! Go to the next section.

Verify Installation

In this section we check that some of the required tools / drivers have been correctly installed and configured.

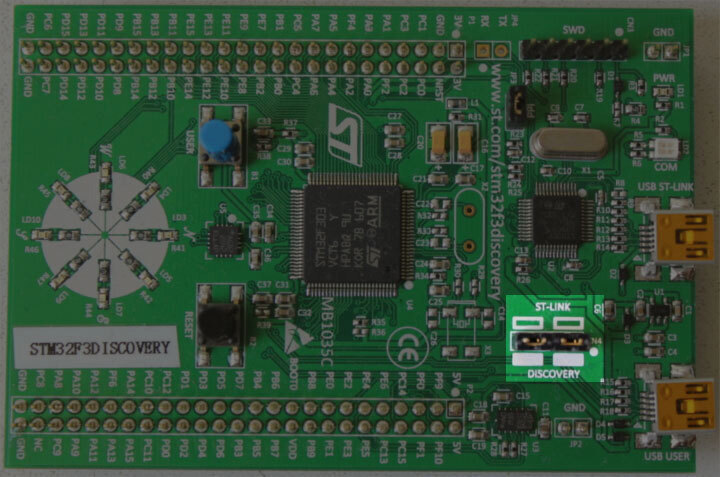

Connect your laptop / PC to the discovery board using a Mini-USB cable. The discovery board has two USB connectors; use the one labeled "USB ST-LINK" that sits at the center of the edge of the board.

Also check that the ST-LINK header is populated. See the picture below; the ST-LINK header is highlighted.

Now run the following command:

openocd -f interface/stlink.cfg -f target/stm32f3x.cfg

NOTE: Old versions of openocd, including the 0.10.0 release from 2017, do not contain the new (and preferable) interface/stlink.cfg file; instead you may need to use interface/stlink-v2.cfg or interface/stlink-v2-1.cfg.

You should get the following output and the program should block the console:

Open On-Chip Debugger 0.10.0

Licensed under GNU GPL v2

For bug reports, read

http://openocd.org/doc/doxygen/bugs.html

Info : auto-selecting first available session transport "hla_swd". To override use 'transport select <transport>'.

adapter speed: 1000 kHz

adapter_nsrst_delay: 100

Info : The selected transport took over low-level target control. The results might differ compared to plain JTAG/SWD

none separate

Info : Unable to match requested speed 1000 kHz, using 950 kHz

Info : Unable to match requested speed 1000 kHz, using 950 kHz

Info : clock speed 950 kHz

Info : STLINK v2 JTAG v27 API v2 SWIM v15 VID 0x0483 PID 0x374B

Info : using stlink api v2

Info : Target voltage: 2.919881

Info : stm32f3x.cpu: hardware has 6 breakpoints, 4 watchpoints

The contents may not match exactly but you should get the last line about breakpoints and watchpoints. If you got it then terminate the OpenOCD process and move to the next section.
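If you capture the OpenOCD output to a file or a string, the check for that last line can be automated. The sketch below is a hypothetical helper, not part of OpenOCD; it assumes the wording "hardware has N breakpoints, M watchpoints" seen in the sample output above.

```rust
/// Looks for the line OpenOCD prints once it has attached to the target, e.g.
/// `Info : stm32f3x.cpu: hardware has 6 breakpoints, 4 watchpoints`,
/// and returns `(breakpoints, watchpoints)` if it is present.
pub fn debug_resources(log: &str) -> Option<(u32, u32)> {
    log.lines().find_map(|line| {
        // Everything after the marker should read "N breakpoints, M watchpoints".
        let rest = line.split("hardware has ").nth(1)?;
        let mut words = rest.split_whitespace();
        let breakpoints: u32 = words.next()?.parse().ok()?;
        if !words.next()?.starts_with("breakpoints") {
            return None;
        }
        let watchpoints: u32 = words.next()?.parse().ok()?;
        if !words.next()?.starts_with("watchpoints") {
            return None;
        }
        Some((breakpoints, watchpoints))
    })
}
```

A result of None means the line was not found, which is the cue to try the alternative interface files or check the udev rules.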

If you didn't get the "breakpoints" line then try one of the following commands.

openocd -f interface/stlink-v2.cfg -f target/stm32f3x.cfg

openocd -f interface/stlink-v2-1.cfg -f target/stm32f3x.cfg

If one of those commands works it means you got an old hardware revision of the discovery board. That won't be a problem but commit that fact to memory as you'll need to configure things a bit differently later on. You can move to the next section.

If none of the commands work as a normal user then try to run them with root permission (e.g. sudo openocd ..). If the commands do work with root permission then check that the udev rules have been correctly set.

If you have reached this point and OpenOCD is not working please open an issue and we'll help you out!

Getting Started

In this section we'll walk you through the process of writing, building, flashing and debugging embedded programs. You will be able to try most of the examples without any special hardware as we will show you the basics using QEMU, a popular open-source hardware emulator. The only section where hardware is required is, naturally enough, the Hardware section, where we use OpenOCD to program an STM32F3DISCOVERY.

QEMU

We'll start writing a program for the LM3S6965, a Cortex-M3 microcontroller. We have chosen this as our initial target because it can be emulated using QEMU so you don't need to fiddle with hardware in this section and we can focus on the tooling and the development process.

IMPORTANT We'll use the name "app" for the project name in this tutorial. Whenever you see the word "app" you should replace it with the name you selected for your project. Or, you could also name your project "app" and avoid the substitutions.

Creating a non standard Rust program

We'll use the cortex-m-quickstart project template to generate a new project from it. The created project will contain a barebone application: a good starting point for a new embedded Rust application. In addition, the project will contain an examples directory, with several separate applications, highlighting some of the key embedded Rust functionality.

Using cargo-generate

First install cargo-generate

cargo install cargo-generate

Then generate a new project

cargo generate --git https://github.com/rust-embedded/cortex-m-quickstart

Project Name: app

Creating project called `app`...

Done! New project created /tmp/app

cd app

Using git

Clone the repository

git clone https://github.com/rust-embedded/cortex-m-quickstart app

cd app

And then fill in the placeholders in the Cargo.toml file

[package]

authors = ["{{authors}}"] # "{{authors}}" -> "John Smith"

edition = "2018"

name = "{{project-name}}" # "{{project-name}}" -> "app"

version = "0.1.0"

# ..

[[bin]]

name = "{{project-name}}" # "{{project-name}}" -> "app"

test = false

bench = false

Using neither

Grab the latest snapshot of the cortex-m-quickstart template and extract it.

curl -LO https://github.com/rust-embedded/cortex-m-quickstart/archive/master.zip

unzip master.zip

mv cortex-m-quickstart-master app

cd app

Or you can browse to cortex-m-quickstart, click the green "Clone or download" button and then click "Download ZIP".

Then fill in the placeholders in the Cargo.toml file as done in the second part of the "Using git" version.

Program Overview

For convenience here are the most important parts of the source code in src/main.rs:

#![no_std]
#![no_main]

use panic_halt as _;

use cortex_m_rt::entry;

#[entry]
fn main() -> ! {
    loop {
        // your code goes here
    }
}

This program is a bit different from a standard Rust program so let's take a closer look.

#![no_std] indicates that this program will not link to the standard crate, std. Instead it will link to its subset: the core crate.

#![no_main] indicates that this program won't use the standard main interface that most Rust programs use. The main (no pun intended) reason to go with no_main is that using the main interface in no_std context requires nightly.

use panic_halt as _;. This crate provides a panic_handler that defines the panicking behavior of the program. We will cover this in more detail in the Panicking chapter of the book.

#[entry] is an attribute provided by the cortex-m-rt crate that's used to mark the entry point of the program. As we are not using the standard main interface we need another way to indicate the entry point of the program and that'd be #[entry].

fn main() -> !. Our program will be the only process running on the target hardware so we don't want it to end! We use a divergent function (the -> ! bit in the function signature) to ensure at compile time that'll be the case.

Cross compiling

The next step is to cross compile the program for the Cortex-M3 architecture. That's as simple as running cargo build --target $TRIPLE if you know what the compilation target ($TRIPLE) should be. Luckily, the .cargo/config.toml in the template has the answer:

tail -n6 .cargo/config.toml

[build]

# Pick ONE of these compilation targets

# target = "thumbv6m-none-eabi" # Cortex-M0 and Cortex-M0+

target = "thumbv7m-none-eabi" # Cortex-M3

# target = "thumbv7em-none-eabi" # Cortex-M4 and Cortex-M7 (no FPU)

# target = "thumbv7em-none-eabihf" # Cortex-M4F and Cortex-M7F (with FPU)

To cross compile for the Cortex-M3 architecture we have to use thumbv7m-none-eabi. That target is not automatically installed when installing the Rust toolchain, so now would be a good time to add it, if you haven't done so yet:

rustup target add thumbv7m-none-eabi

Since the thumbv7m-none-eabi compilation target has been set as the default in your .cargo/config.toml file, the two commands below do the same thing:

cargo build --target thumbv7m-none-eabi

cargo build

Inspecting

Now we have a non-native ELF binary in target/thumbv7m-none-eabi/debug/app. We can inspect it using cargo-binutils.

With cargo-readobj we can print the ELF headers to confirm that this is an ARM binary.

cargo readobj --bin app -- --file-headers

Note that:

- --bin app is sugar for inspecting the binary at target/$TRIPLE/debug/app

- --bin app will also (re)compile the binary, if necessary

ELF Header:

Magic: 7f 45 4c 46 01 01 01 00 00 00 00 00 00 00 00 00

Class: ELF32

Data: 2's complement, little endian

Version: 1 (current)

OS/ABI: UNIX - System V

ABI Version: 0x0

Type: EXEC (Executable file)

Machine: ARM

Version: 0x1

Entry point address: 0x405

Start of program headers: 52 (bytes into file)

Start of section headers: 153204 (bytes into file)

Flags: 0x5000200

Size of this header: 52 (bytes)

Size of program headers: 32 (bytes)

Number of program headers: 2

Size of section headers: 40 (bytes)

Number of section headers: 19

Section header string table index: 18
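The fields printed above sit at fixed offsets at the start of the file, so the "is this an ARM binary?" check can also be done by hand. The sketch below is only an illustration under stated assumptions: it handles the ELF32 little-endian layout only, and `ElfSummary` and `summarize_elf32_le` are hypothetical names, not part of cargo-binutils.

```rust
/// `e_machine` value that the ELF specification assigns to ARM.
const EM_ARM: u16 = 40;

/// The two header fields this sketch extracts.
#[derive(Debug, PartialEq)]
struct ElfSummary {
    machine: u16,
    entry: u32,
}

/// Reads `e_machine` and `e_entry` from the start of an ELF32
/// little-endian file. Returns `None` for anything else.
fn summarize_elf32_le(bytes: &[u8]) -> Option<ElfSummary> {
    // The fields read below end at byte offset 28.
    if bytes.len() < 28 || !bytes.starts_with(&[0x7f, b'E', b'L', b'F']) {
        return None;
    }
    // e_ident[4] is the class (1 = ELF32); e_ident[5] is the data encoding
    // (1 = little endian). Anything else is out of scope for this sketch.
    if bytes[4] != 1 || bytes[5] != 1 {
        return None;
    }
    // e_machine lives at offset 18, e_entry at offset 24.
    let machine = u16::from_le_bytes([bytes[18], bytes[19]]);
    let entry = u32::from_le_bytes([bytes[24], bytes[25], bytes[26], bytes[27]]);
    Some(ElfSummary { machine, entry })
}
```

Feeding it the first bytes of target/thumbv7m-none-eabi/debug/app (for example via `std::fs::read`) should report a `machine` equal to `EM_ARM`, matching the `Machine: ARM` line.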

cargo-size can print the size of the linker sections of the binary.

cargo size --bin app --release -- -A

we use --release to inspect the optimized version

app :

section size addr

.vector_table 1024 0x0

.text 92 0x400

.rodata 0 0x45c

.data 0 0x20000000

.bss 0 0x20000000

.debug_str 2958 0x0

.debug_loc 19 0x0

.debug_abbrev 567 0x0

.debug_info 4929 0x0

.debug_ranges 40 0x0

.debug_macinfo 1 0x0

.debug_pubnames 2035 0x0

.debug_pubtypes 1892 0x0

.ARM.attributes 46 0x0

.debug_frame 100 0x0

.debug_line 867 0x0

Total 14570

A refresher on ELF linker sections

- .text contains the program instructions

- .rodata contains constant values like strings

- .data contains statically allocated variables whose initial values are not zero

- .bss also contains statically allocated variables whose initial values are zero

- .vector_table is a non-standard section that we use to store the vector (interrupt) table

- .ARM.attributes and the .debug_* sections contain metadata and will not be loaded onto the target when flashing the binary.

IMPORTANT: ELF files contain metadata like debug information so their size

on disk does not accurately reflect the space the program will occupy when

flashed on a device. Always use cargo-size to check how big a binary really

is.
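As a worked example of that point, the sketch below adds up only the sections that end up on the device. It assumes the usual cortex-m-rt layout, in which the initial values of .data are stored in Flash and copied to RAM at startup; `footprint` is a hypothetical helper, not a cargo-size feature.

```rust
/// Returns `(flash_bytes, ram_bytes)` for `(section, size)` pairs as printed
/// by `cargo size -- -A`.
fn footprint(sections: &[(&str, u32)]) -> (u32, u32) {
    let mut flash = 0;
    let mut ram = 0;
    for &(name, size) in sections {
        match name {
            // Stored in Flash only.
            ".vector_table" | ".text" | ".rodata" => flash += size,
            // Assumption: initial values live in Flash and are copied to RAM.
            ".data" => {
                flash += size;
                ram += size;
            }
            // Zero-initialized, so it only reserves RAM.
            ".bss" => ram += size,
            // Debug info and attributes never reach the device.
            _ => {}
        }
    }
    (flash, ram)
}
```

With the numbers from the listing above, the on-device footprint is 1024 + 92 = 1116 bytes of Flash and no RAM, even though the section sizes total 14570 bytes.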

cargo-objdump can be used to disassemble the binary.

cargo objdump --bin app --release -- --disassemble --no-show-raw-insn --print-imm-hex

NOTE if the above command complains about Unknown command line argument, see the following bug report: https://github.com/rust-embedded/book/issues/269

NOTE this output can differ on your system. New versions of rustc, LLVM and libraries can generate different assembly. We truncated some of the instructions to keep the snippet small.

app: file format ELF32-arm-little

Disassembly of section .text:

main:

400: bl #0x256

404: b #-0x4 <main+0x4>

Reset:

406: bl #0x24e

40a: movw r0, #0x0

< .. truncated any more instructions .. >

DefaultHandler_:

656: b #-0x4 <DefaultHandler_>

UsageFault:

657: strb r7, [r4, #0x3]

DefaultPreInit:

658: bx lr

__pre_init:

659: strb r7, [r0, #0x1]

__nop:

65a: bx lr

HardFaultTrampoline:

65c: mrs r0, msp

660: b #-0x2 <HardFault_>

HardFault_:

662: b #-0x4 <HardFault_>

HardFault:

663: <unknown>

Running

Next, let's see how to run an embedded program on QEMU! This time we'll use the

hello example which actually does something.

For convenience here's the source code of examples/hello.rs:

//! Prints "Hello, world!" on the host console using semihosting

#![no_main]

#![no_std]

use panic_halt as _;

use cortex_m_rt::entry;

use cortex_m_semihosting::{debug, hprintln};

#[entry]

fn main() -> ! {

hprintln!("Hello, world!").unwrap();

// exit QEMU

// NOTE do not run this on hardware; it can corrupt OpenOCD state

debug::exit(debug::EXIT_SUCCESS);

loop {}

}

This program uses something called semihosting to print text to the host console. When using real hardware this requires a debug session but when using QEMU this Just Works.

Let's start by compiling the example:

cargo build --example hello

The output binary will be located at

target/thumbv7m-none-eabi/debug/examples/hello.

To run this binary on QEMU run the following command:

qemu-system-arm \

-cpu cortex-m3 \

-machine lm3s6965evb \

-nographic \

-semihosting-config enable=on,target=native \

-kernel target/thumbv7m-none-eabi/debug/examples/hello

Hello, world!

The command should successfully exit (exit code = 0) after printing the text. On *nix you can check that with the following command:

echo $?

0

Let's break down that QEMU command:

- qemu-system-arm. This is the QEMU emulator. There are a few variants of these QEMU binaries; this one does full system emulation of ARM machines hence the name.

- -cpu cortex-m3. This tells QEMU to emulate a Cortex-M3 CPU. Specifying the CPU model lets us catch some miscompilation errors: for example, running a program compiled for the Cortex-M4F, which has a hardware FPU, will make QEMU error during its execution.

- -machine lm3s6965evb. This tells QEMU to emulate the LM3S6965EVB, an evaluation board that contains a LM3S6965 microcontroller.

- -nographic. This tells QEMU to not launch its GUI.

- -semihosting-config (..). This tells QEMU to enable semihosting. Semihosting lets the emulated device, among other things, use the host stdout, stderr and stdin and create files on the host.

- -kernel $file. This tells QEMU which binary to load and run on the emulated machine.

Typing out that long QEMU command is too much work! We can set a custom runner

to simplify the process. .cargo/config.toml has a commented out runner that invokes

QEMU; let's uncomment it:

head -n3 .cargo/config.toml

[target.thumbv7m-none-eabi]

# uncomment this to make `cargo run` execute programs on QEMU

runner = "qemu-system-arm -cpu cortex-m3 -machine lm3s6965evb -nographic -semihosting-config enable=on,target=native -kernel"

This runner only applies to the thumbv7m-none-eabi target, which is our

default compilation target. Now cargo run will compile the program and run it

on QEMU:

cargo run --example hello --release

Compiling app v0.1.0 (file:///tmp/app)

Finished release [optimized + debuginfo] target(s) in 0.26s

Running `qemu-system-arm -cpu cortex-m3 -machine lm3s6965evb -nographic -semihosting-config enable=on,target=native -kernel target/thumbv7m-none-eabi/release/examples/hello`

Hello, world!
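The runner string ends in `-kernel` because the path of the freshly built binary is appended to it, as the `Running` line above shows. The following host-side sketch mimics that step; `runner_invocation` is a hypothetical helper, and splitting on whitespace is a simplification rather than a description of Cargo's own parsing.

```rust
/// Splits a runner string on whitespace and appends the binary path, which
/// mirrors the command shown on the `Running` line printed by `cargo run`.
fn runner_invocation(runner: &str, binary: &str) -> Vec<String> {
    runner
        .split_whitespace()
        .map(String::from)
        .chain(std::iter::once(binary.to_string()))
        .collect()
}
```

The first element of the result is the program to launch and the rest are its arguments, so it could be handed to `std::process::Command::new(&argv[0]).args(&argv[1..])`.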

Debugging

Debugging is critical to embedded development. Let's see how it's done.

Debugging an embedded device involves remote debugging as the program that we want to debug won't be running on the machine that's running the debugger program (GDB or LLDB).

Remote debugging involves a client and a server. In a QEMU setup, the client will be a GDB (or LLDB) process and the server will be the QEMU process that's also running the embedded program.

In this section we'll use the hello example we already compiled.

The first debugging step is to launch QEMU in debugging mode:

qemu-system-arm \

-cpu cortex-m3 \

-machine lm3s6965evb \

-nographic \

-semihosting-config enable=on,target=native \

-gdb tcp::3333 \

-S \

-kernel target/thumbv7m-none-eabi/debug/examples/hello

This command won't print anything to the console and will block the terminal. We have passed two extra flags this time:

- -gdb tcp::3333. This tells QEMU to wait for a GDB connection on TCP port 3333.

- -S. This tells QEMU to freeze the machine at startup. Without this the program would have reached the end of main before we had a chance to launch the debugger!

Next we launch GDB in another terminal and tell it to load the debug symbols of the example:

gdb-multiarch -q target/thumbv7m-none-eabi/debug/examples/hello

NOTE: you might need another version of gdb instead of gdb-multiarch depending

on which one you installed in the installation chapter. This could also be

arm-none-eabi-gdb or just gdb.

Then within the GDB shell we connect to QEMU, which is waiting for a connection on TCP port 3333.

target remote :3333

Remote debugging using :3333

Reset () at $REGISTRY/cortex-m-rt-0.6.1/src/lib.rs:473

473 pub unsafe extern "C" fn Reset() -> ! {

You'll see that the process is halted and that the program counter is pointing

to a function named Reset. That is the reset handler: what Cortex-M cores

execute upon booting.

Note that on some setups, instead of displaying the line

Reset () at $REGISTRY/cortex-m-rt-0.6.1/src/lib.rs:473

as shown above, gdb may print some warnings like:

core::num::bignum::Big32x40::mul_small () at src/libcore/num/bignum.rs:254

src/libcore/num/bignum.rs: No such file or directory.

That's a known glitch. You can safely ignore those warnings; you're most likely at Reset().

This reset handler will eventually call our main function. Let's skip all the

way there using a breakpoint and the continue command. To set the breakpoint, let's first take a look where we would like to break in our code, with the list command.

list main

This will show the source code, from the file examples/hello.rs.

6 use panic_halt as _;

7

8 use cortex_m_rt::entry;

9 use cortex_m_semihosting::{debug, hprintln};

10

11 #[entry]

12 fn main() -> ! {

13 hprintln!("Hello, world!").unwrap();

14

15 // exit QEMU

We would like to add a breakpoint just before the "Hello, world!", which is on line 13. We do that with the break command:

break 13

We can now instruct gdb to run up to our main function, with the continue command:

continue

Continuing.

Breakpoint 1, hello::__cortex_m_rt_main () at examples\hello.rs:13

13 hprintln!("Hello, world!").unwrap();

We are now close to the code that prints "Hello, world!". Let's move forward

using the next command.

next

16 debug::exit(debug::EXIT_SUCCESS);

At this point you should see "Hello, world!" printed on the terminal that's

running qemu-system-arm.

$ qemu-system-arm (..)

Hello, world!

Calling next again will terminate the QEMU process.

next

[Inferior 1 (Remote target) exited normally]

You can now exit the GDB session.

quit

Hardware

By now you should be somewhat familiar with the tooling and the development process. In this section we'll switch to real hardware; the process will remain largely the same. Let's dive in.

Know your hardware

Before we begin you need to identify some characteristics of the target device as these will be used to configure the project:

-

The ARM core. e.g. Cortex-M3.

-

Does the ARM core include an FPU? Cortex-M4F and Cortex-M7F cores do.

-

How much Flash memory and RAM does the target device have? e.g. 256 KiB of Flash and 32 KiB of RAM.

-

Where are Flash memory and RAM mapped in the address space? e.g. RAM is commonly located at address

0x2000_0000.

You can find this information in the data sheet or the reference manual of your device.

In this section we'll be using our reference hardware, the STM32F3DISCOVERY. This board contains an STM32F303VCT6 microcontroller. This microcontroller has:

-

A Cortex-M4F core that includes a single precision FPU

-

256 KiB of Flash located at address 0x0800_0000.

-

40 KiB of RAM located at address 0x2000_0000. (There's another RAM region but for simplicity we'll ignore it).

Configuring

We'll start from scratch with a fresh template instance. Refer to the

previous section on QEMU for a refresher on how to do this without

cargo-generate.

$ cargo generate --git https://github.com/rust-embedded/cortex-m-quickstart

Project Name: app

Creating project called `app`...

Done! New project created /tmp/app

$ cd app

Step number one is to set a default compilation target in .cargo/config.toml.

tail -n5 .cargo/config.toml

# Pick ONE of these compilation targets

# target = "thumbv6m-none-eabi" # Cortex-M0 and Cortex-M0+

# target = "thumbv7m-none-eabi" # Cortex-M3

# target = "thumbv7em-none-eabi" # Cortex-M4 and Cortex-M7 (no FPU)

target = "thumbv7em-none-eabihf" # Cortex-M4F and Cortex-M7F (with FPU)

We'll use thumbv7em-none-eabihf as that covers the Cortex-M4F core.

NOTE: As you may remember from the previous chapter, every compilation target has to be installed, and this is a new one. So don't forget to run rustup target add thumbv7em-none-eabihf for this target.
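The choice of target follows directly from the comments in .cargo/config.toml. As a rough sketch, the mapping can be written as a lookup; the `Core` enum and `target_triple` function are hypothetical names used only for illustration.

```rust
/// The Cortex-M cores listed in the template's `.cargo/config.toml`.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Core {
    M0,
    M0Plus,
    M3,
    M4,
    M7,
}

/// Picks the compilation target for a core, following the comments in the
/// template's config file. `has_fpu` only matters for the M4 and M7.
fn target_triple(core: Core, has_fpu: bool) -> &'static str {
    match (core, has_fpu) {
        (Core::M0, _) | (Core::M0Plus, _) => "thumbv6m-none-eabi",
        (Core::M3, _) => "thumbv7m-none-eabi",
        (Core::M4, false) | (Core::M7, false) => "thumbv7em-none-eabi",
        (Core::M4, true) | (Core::M7, true) => "thumbv7em-none-eabihf",
    }
}
```

For the STM32F303VCT6, `target_triple(Core::M4, true)` yields thumbv7em-none-eabihf, the target selected above.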

The second step is to enter the memory region information into the memory.x

file.

$ cat memory.x

/* Linker script for the STM32F303VCT6 */

MEMORY

{

/* NOTE 1 K = 1 KiB = 1024 bytes */

FLASH : ORIGIN = 0x08000000, LENGTH = 256K

RAM : ORIGIN = 0x20000000, LENGTH = 40K

}

NOTE: If you for some reason changed the memory.x file after you had made the first build of a specific build target, then do cargo clean before cargo build, because cargo build may not track updates of memory.x.
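A wrong ORIGIN or LENGTH is easy to miss, so it can help to turn the memory.x entries into address ranges and compare them with the data sheet. The sketch below is a host-side aid under stated assumptions: `Region` and `parse_length` are hypothetical names, and only the plain-number and `K` suffix forms of LENGTH are handled.

```rust
/// One entry of the MEMORY block in `memory.x`.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Region {
    origin: u32,
    length: u32,
}

impl Region {
    /// First address past the region (kept in `u64` to avoid overflow).
    fn end(&self) -> u64 {
        self.origin as u64 + self.length as u64
    }

    /// Whether `addr` falls inside the region.
    fn contains(&self, addr: u32) -> bool {
        let addr = addr as u64;
        addr >= self.origin as u64 && addr < self.end()
    }
}

/// Converts a linker-script length such as `256K` or `40K` into bytes.
fn parse_length(text: &str) -> Option<u32> {
    let text = text.trim();
    if let Some(kib) = text.strip_suffix('K') {
        kib.parse::<u32>().ok()?.checked_mul(1024)
    } else {
        text.parse().ok()
    }
}
```

For the values above, FLASH spans 0x0800_0000 up to (but not including) 0x0804_0000 and RAM spans 0x2000_0000 up to 0x2000_A000.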

We'll start with the hello example again, but first we have to make a small change.

In examples/hello.rs, make sure the debug::exit() call is commented out or

removed. It is used only for running in QEMU.

#[entry]

fn main() -> ! {

hprintln!("Hello, world!").unwrap();

// exit QEMU

// NOTE do not run this on hardware; it can corrupt OpenOCD state

// debug::exit(debug::EXIT_SUCCESS);

loop {}

}

You can now cross compile programs using cargo build

and inspect the binaries using cargo-binutils as you did before. The

cortex-m-rt crate handles all the magic required to get your chip running;

helpfully, pretty much all Cortex-M CPUs boot in the same fashion.

cargo build --example hello

Debugging

Debugging will look a bit different. In fact, the first steps can look different depending on the target device. In this section we'll show the steps required to debug a program running on the STM32F3DISCOVERY. This is meant to serve as a reference; for device specific information about debugging check out the Debugonomicon.

As before we'll do remote debugging and the client will be a GDB process. This time, however, the server will be OpenOCD.

As you did in the verify section, connect the discovery board to your laptop / PC and check that the ST-LINK header is populated.

On a terminal run openocd to connect to the ST-LINK on the discovery board.

Run this command from the root of the template; openocd will pick up the

openocd.cfg file which indicates which interface file and target file to use.

cat openocd.cfg

# Sample OpenOCD configuration for the STM32F3DISCOVERY development board

# Depending on the hardware revision you got you'll have to pick ONE of these

# interfaces. At any time only one interface should be commented out.

# Revision C (newer revision)

source [find interface/stlink.cfg]

# Revision A and B (older revisions)

# source [find interface/stlink-v2.cfg]

source [find target/stm32f3x.cfg]

NOTE If you found out that you have an older revision of the discovery board during the verify section then you should modify the openocd.cfg file at this point to use interface/stlink-v2.cfg.

$ openocd

Open On-Chip Debugger 0.10.0

Licensed under GNU GPL v2

For bug reports, read

http://openocd.org/doc/doxygen/bugs.html

Info : auto-selecting first available session transport "hla_swd". To override use 'transport select <transport>'.

adapter speed: 1000 kHz

adapter_nsrst_delay: 100

Info : The selected transport took over low-level target control. The results might differ compared to plain JTAG/SWD

none separate

Info : Unable to match requested speed 1000 kHz, using 950 kHz

Info : Unable to match requested speed 1000 kHz, using 950 kHz

Info : clock speed 950 kHz

Info : STLINK v2 JTAG v27 API v2 SWIM v15 VID 0x0483 PID 0x374B

Info : using stlink api v2

Info : Target voltage: 2.913879

Info : stm32f3x.cpu: hardware has 6 breakpoints, 4 watchpoints

On another terminal run GDB, also from the root of the template.

gdb-multiarch -q target/thumbv7em-none-eabihf/debug/examples/hello

NOTE: like before you might need another version of gdb instead of gdb-multiarch depending

on which one you installed in the installation chapter. This could also be

arm-none-eabi-gdb or just gdb.

Next connect GDB to OpenOCD, which is waiting for a TCP connection on port 3333.

(gdb) target remote :3333

Remote debugging using :3333

0x00000000 in ?? ()

Now proceed to flash (load) the program onto the microcontroller using the

load command.

(gdb) load

Loading section .vector_table, size 0x400 lma 0x8000000

Loading section .text, size 0x1518 lma 0x8000400

Loading section .rodata, size 0x414 lma 0x8001918

Start address 0x08000400, load size 7468

Transfer rate: 13 KB/sec, 2489 bytes/write.
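The reported load size is simply the sum of the section sizes: 0x400 + 0x1518 + 0x414 = 1024 + 5400 + 1044 = 7468 bytes. A small host-side sketch of that arithmetic follows; `section_size` and `total_load_size` are hypothetical helpers that only understand the line format shown above.

```rust
/// Extracts the `size 0x...` field from one `Loading section` line.
/// Lines without that field (such as `Start address ...`) yield `None`.
fn section_size(line: &str) -> Option<u32> {
    let rest = line.split("size 0x").nth(1)?;
    let hex = rest.split_whitespace().next()?;
    u32::from_str_radix(hex, 16).ok()
}

/// Sums the sizes of all sections mentioned in GDB's `load` output.
fn total_load_size(log: &str) -> u32 {
    log.lines().filter_map(section_size).sum()
}
```

Comparing this total against the Flash length in memory.x is a quick way to see how much room is left on the device.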

The program is now loaded. This program uses semihosting so before we do any

semihosting call we have to tell OpenOCD to enable semihosting. You can send

commands to OpenOCD using the monitor command.

(gdb) monitor arm semihosting enable

semihosting is enabled

You can see all the OpenOCD commands by invoking the monitor help command.

Like before we can skip all the way to main using a breakpoint and the

continue command.

(gdb) break main

Breakpoint 1 at 0x8000490: file examples/hello.rs, line 11.

Note: automatically using hardware breakpoints for read-only addresses.

(gdb) continue

Continuing.

Breakpoint 1, hello::__cortex_m_rt_main_trampoline () at examples/hello.rs:11

11 #[entry]

NOTE If GDB blocks the terminal instead of hitting the breakpoint after you issue the continue command above, you might want to double check that the memory region information in the memory.x file is correctly set up for your device (both the starts and lengths).

Step into the main function with step.

(gdb) step

halted: PC: 0x08000496

hello::__cortex_m_rt_main () at examples/hello.rs:13

13 hprintln!("Hello, world!").unwrap();

After advancing the program with next you should see "Hello, world!" printed on the OpenOCD console,

among other stuff.

$ openocd

(..)

Info : halted: PC: 0x08000502

Hello, world!

Info : halted: PC: 0x080004ac

Info : halted: PC: 0x080004ae

Info : halted: PC: 0x080004b0

Info : halted: PC: 0x080004b4

Info : halted: PC: 0x080004b8

Info : halted: PC: 0x080004bc

The message is only displayed once as the program is about to enter the infinite loop defined in line 19: loop {}

You can now exit GDB using the quit command.

(gdb) quit

A debugging session is active.

Inferior 1 [Remote target] will be detached.

Quit anyway? (y or n)

Debugging now requires a few more steps so we have packed all those steps into a

single GDB script named openocd.gdb. The file was created during the cargo generate step, and should work without any modifications. Let's have a peek:

cat openocd.gdb

target extended-remote :3333

# print demangled symbols

set print asm-demangle on

# detect unhandled exceptions, hard faults and panics

break DefaultHandler

break HardFault

break rust_begin_unwind

monitor arm semihosting enable

load

# start the process but immediately halt the processor

stepi

Now running <gdb> -x openocd.gdb target/thumbv7em-none-eabihf/debug/examples/hello will immediately connect GDB to

OpenOCD, enable semihosting, load the program and start the process.

Alternatively, you can turn <gdb> -x openocd.gdb into a custom runner to make

cargo run build a program and start a GDB session. This runner is included

in .cargo/config.toml but it's commented out.

head -n10 .cargo/config.toml

[target.thumbv7m-none-eabi]

# uncomment this to make `cargo run` execute programs on QEMU

# runner = "qemu-system-arm -cpu cortex-m3 -machine lm3s6965evb -nographic -semihosting-config enable=on,target=native -kernel"

[target.'cfg(all(target_arch = "arm", target_os = "none"))']

# uncomment ONE of these three option to make `cargo run` start a GDB session

# which option to pick depends on your system

runner = "arm-none-eabi-gdb -x openocd.gdb"

# runner = "gdb-multiarch -x openocd.gdb"

# runner = "gdb -x openocd.gdb"

$ cargo run --example hello

(..)

Loading section .vector_table, size 0x400 lma 0x8000000

Loading section .text, size 0x1e70 lma 0x8000400

Loading section .rodata, size 0x61c lma 0x8002270

Start address 0x800144e, load size 10380

Transfer rate: 17 KB/sec, 3460 bytes/write.

(gdb)

Memory Mapped Registers

Embedded systems can only get so far by executing normal Rust code and moving data around in RAM. If we want to get any information into or out of our system (be that blinking an LED, detecting a button press or communicating with an off-chip peripheral on some sort of bus) we're going to have to dip into the world of Peripherals and their 'memory mapped registers'.

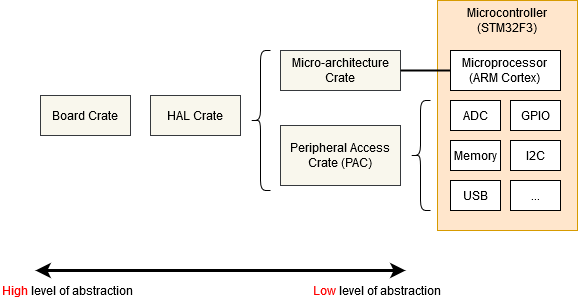

You may well find that the code you need to access the peripherals in your micro-controller has already been written, at one of the following levels:

- Micro-architecture Crate - This sort of crate handles any useful routines common to the processor core your microcontroller is using, as well as any peripherals that are common to all micro-controllers that use that particular type of processor core. For example the cortex-m crate gives you functions to enable and disable interrupts, which are the same for all Cortex-M based micro-controllers. It also gives you access to the 'SysTick' peripheral included with all Cortex-M based micro-controllers.

- Peripheral Access Crate (PAC) - This sort of crate is a thin wrapper over the various memory-mapped registers defined for the particular part number of micro-controller you are using. For example, tm4c123x for the Texas Instruments Tiva-C TM4C123 series, or stm32f30x for the ST-Micro STM32F30x series. Here, you'll be interacting with the registers directly, following each peripheral's operating instructions given in your micro-controller's Technical Reference Manual.

- HAL Crate - These crates offer a more user-friendly API for your particular processor, often by implementing some common traits defined in embedded-hal. For example, this crate might offer a Serial struct, with a constructor that takes an appropriate set of GPIO pins and a baud rate, and offers some sort of write_byte function for sending data. See the chapter on Portability for more information on embedded-hal.

- Board Crate - These crates go one step further than a HAL Crate by pre-configuring various peripherals and GPIO pins to suit the specific developer kit or board you are using, such as stm32f3-discovery for the STM32F3DISCOVERY board.

Board Crate

A board crate is the perfect starting point if you're new to embedded Rust. Board crates nicely abstract the hardware details that might be overwhelming when you start studying this subject, and they make standard tasks easy, like turning an LED on or off. The functionality they expose varies a lot between boards. Since this book aims to stay hardware agnostic, board crates won't be covered by this book.

If you want to experiment with the STM32F3DISCOVERY board, it is highly recommended to take a look at the stm32f3-discovery board crate, which provides functionality to blink the board LEDs, access its compass, bluetooth and more. The Discovery book offers a great introduction to the use of a board crate.

But if you're working on a system that doesn't yet have a dedicated board crate, or you need functionality not provided by existing crates, read on as we start from the bottom, with the micro-architecture crates.

Micro-architecture crate

Let's look at the SysTick peripheral that's common to all Cortex-M based micro-controllers. We can find a pretty low-level API in the cortex-m crate, and we can use it like this:

#![no_std]

#![no_main]

use cortex_m::peripheral::{syst, Peripherals};

use cortex_m_rt::entry;

use panic_halt as _;

#[entry]

fn main() -> ! {

let peripherals = Peripherals::take().unwrap();

let mut systick = peripherals.SYST;

systick.set_clock_source(syst::SystClkSource::Core);

systick.set_reload(1_000);

systick.clear_current();

systick.enable_counter();

while !systick.has_wrapped() {

// Loop

}

loop {}

}

The functions on the SYST struct map pretty closely to the functionality defined by the ARM Technical Reference Manual for this peripheral. There's nothing in this API about 'delaying for X milliseconds' - we have to crudely implement that ourselves using a while loop. Note that we can't access our SYST struct until we have called Peripherals::take() - this is a special routine that guarantees that there is only one SYST structure in our entire program. For more on that, see the Peripherals section.
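To turn 'delay for X milliseconds' into a reload value we need the core clock frequency. The sketch below shows the arithmetic on the host; it assumes SysTick is clocked from the core clock, and the 8 MHz figure in the usage note is a made-up example, not a property of any particular chip. `reload_for_ms` is a hypothetical helper, not part of the cortex-m crate.

```rust
/// Largest value the 24-bit SysTick reload register can hold.
const SYST_MAX_RELOAD: u64 = 0x00FF_FFFF;

/// Reload value that makes the counter wrap after `ms` milliseconds, or
/// `None` if the delay is zero or does not fit in 24 bits.
fn reload_for_ms(core_hz: u32, ms: u32) -> Option<u32> {
    // Number of clock ticks in the requested delay.
    let ticks = (core_hz as u64 / 1_000) * ms as u64;
    if ticks == 0 {
        return None;
    }
    // The counter runs from the reload value down to zero, so a period of
    // N ticks needs a reload value of N - 1.
    let reload = ticks - 1;
    if reload > SYST_MAX_RELOAD {
        return None;
    }
    Some(reload as u32)
}
```

With a hypothetical 8 MHz core clock, `reload_for_ms(8_000_000, 1)` gives 7_999, which could be passed to `set_reload`. Delays that do not fit in 24 bits have to be built from several shorter wraps.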

Using a Peripheral Access Crate (PAC)

We won't get very far with our embedded software development if we restrict ourselves to only the basic peripherals included with every Cortex-M. At some point, we're going to need to write some code that's specific to the particular micro-controller we're using. In this example, let's assume we have a Texas Instruments TM4C123 - a middling 80MHz Cortex-M4 with 256 KiB of Flash. We're going to pull in the tm4c123x crate to make use of this chip.

#![no_std]

#![no_main]

use panic_halt as _; // panic handler

use cortex_m_rt::entry;

use tm4c123x;

#[entry]

fn main() -> ! {

let cp = cortex_m::Peripherals::take().unwrap();

let p = tm4c123x::Peripherals::take().unwrap();

let pwm = p.PWM0;

pwm.ctl.write(|w| w.globalsync0().clear_bit());

// Mode = 1 => Count up/down mode

pwm._2_ctl.write(|w| w.enable().set_bit().mode().set_bit());

pwm._2_gena.write(|w| w.actcmpau().zero().actcmpad().one());

// 528 cycles (264 up and down) = 4 loops per video line (2112 cycles)

pwm._2_load.write(|w| unsafe { w.load().bits(263) });

pwm._2_cmpa.write(|w| unsafe { w.compa().bits(64) });

pwm.enable.write(|w| w.pwm4en().set_bit());

// The #[entry] function must never return

loop {}

}

We've accessed the PWM0 peripheral in exactly the same way as we accessed the SYST peripheral earlier, except we called tm4c123x::Peripherals::take(). As this crate was auto-generated using svd2rust, the access functions for our register fields take a closure, rather than a numeric argument. While this looks like a lot of code, the Rust compiler can use it to perform a bunch of checks for us, but then generate machine-code which is pretty close to hand-written assembler! Where the auto-generated code isn't able to determine that all possible arguments to a particular accessor function are valid (for example, if the SVD defines the register as 32-bit but doesn't say if some of those 32-bit values have a special meaning), then the function is marked as unsafe. We can see this in the example above when setting the load and compa sub-fields using the bits() function.

Reading

The read() function returns an object which gives read-only access to the various sub-fields within this register, as defined by the manufacturer's SVD file for this chip. You can find all the functions available on the special R return type for this particular register, in this particular peripheral, on this particular chip, in the tm4c123x documentation.

if pwm.ctl.read().globalsync0().is_set() {

// Do a thing

}

Writing

The write() function takes a closure with a single argument. Typically we call this w. This argument then gives read-write access to the various sub-fields within this register, as defined by the manufacturer's SVD file for this chip. Again, you can find all the functions available on the 'w' for this particular register, in this particular peripheral, on this particular chip, in the tm4c123x documentation. Note that all of the sub-fields that we do not set will be set to a default value for us - any existing content in the register will be lost.

pwm.ctl.write(|w| w.globalsync0().clear_bit());

Modifying

If we wish to change only one particular sub-field in this register and leave the other sub-fields unchanged, we can use the modify function. This function takes a closure with two arguments - one for reading and one for writing. Typically we call these r and w respectively. The r argument can be used to inspect the current contents of the register, and the w argument can be used to modify the register contents.

pwm.ctl.modify(|r, w| w.globalsync0().clear_bit());

The modify function really shows the power of closures here. In C, we'd have to read into some temporary value, modify the correct bits and then write the value back. This means there's considerable scope for error:

uint32_t temp = pwm0.ctl.read();

temp |= PWM0_CTL_GLOBALSYNC0;

pwm0.ctl.write(temp);

uint32_t temp2 = pwm0.enable.read();

temp2 |= PWM0_ENABLE_PWM4EN;

pwm0.enable.write(temp); // Uh oh! Wrong variable!
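The difference between write and modify can be tried out on the host with a small in-memory model. This is only a sketch of the closure-based pattern, not the code svd2rust generates: `Reg`, `R`, `W` and the two bit masks are hypothetical, and a `Cell<u32>` stands in for the hardware register.

```rust
use std::cell::Cell;

/// Hypothetical bit positions in a control register.
const GLOBALSYNC0: u32 = 1 << 0;
const GLOBALSYNC1: u32 = 1 << 1;

/// Read-only snapshot of the register contents.
struct R(u32);
/// Value being assembled for the next write.
struct W(u32);

impl R {
    fn bits(&self) -> u32 {
        self.0
    }
}

impl W {
    fn set(&mut self, mask: u32) -> &mut Self {
        self.0 |= mask;
        self
    }
    fn clear(&mut self, mask: u32) -> &mut Self {
        self.0 &= !mask;
        self
    }
}

/// In-memory stand-in for one memory-mapped register.
struct Reg {
    value: Cell<u32>,
    reset_value: u32,
}

impl Reg {
    fn new(reset_value: u32) -> Self {
        Reg { value: Cell::new(reset_value), reset_value }
    }
    fn read(&self) -> R {
        R(self.value.get())
    }
    /// Starts from the reset value: fields the closure leaves alone are lost.
    fn write<F>(&self, f: F)
    where
        F: for<'w> FnOnce(&'w mut W) -> &'w mut W,
    {
        let mut w = W(self.reset_value);
        f(&mut w);
        self.value.set(w.0);
    }
    /// Starts from the current value: untouched fields are preserved.
    fn modify<F>(&self, f: F)
    where
        F: for<'w> FnOnce(&R, &'w mut W) -> &'w mut W,
    {
        let current = self.value.get();
        let mut w = W(current);
        f(&R(current), &mut w);
        self.value.set(w.0);
    }
}
```

After `write(|w| w.set(GLOBALSYNC0 | GLOBALSYNC1))`, a `modify(|_, w| w.clear(GLOBALSYNC0))` leaves GLOBALSYNC1 set, whereas a second `write(|w| w.set(GLOBALSYNC0))` resets it. Because the temporary lives inside the closure call, there is no second variable to mix up as in the C snippet.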

Using a HAL crate

The HAL crate for a chip typically works by implementing a custom Trait for the raw structures exposed by the PAC. Often this trait will define a function called constrain() for single peripherals or split() for things like GPIO ports with multiple pins. This function will consume the underlying raw peripheral structure and return a new object with a higher-level API. This API may also do things like have the Serial port new function require a borrow on some Clock structure, which can only be generated by calling the function which configures the PLLs and sets up all the clock frequencies. In this way, it is statically impossible to create a Serial port object without first having configured the clock rates, or for the Serial port object to misconvert the baud rate into clock ticks. Some crates even define special traits for the states each GPIO pin can be in, requiring the user to put a pin into the correct state (say, by selecting the appropriate Alternate Function Mode) before passing the pin into Peripheral. All with no run-time cost!
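The compile-time guarantees described above come from encoding state in types. Here is a minimal host-side sketch of that idea; every name in it (`Pin`, `Input`, `Alternate`, `ClockSetup`, `Clocks`, `Serial`) is hypothetical, and the division is a placeholder rather than real baud-rate register math.

```rust
use std::marker::PhantomData;

/// Marker types for the mode a pin is in.
struct Input;
struct Alternate;

/// A pin whose mode is tracked in its type.
struct Pin<MODE> {
    number: u8,
    _mode: PhantomData<MODE>,
}

impl Pin<Input> {
    fn new(number: u8) -> Self {
        Pin { number, _mode: PhantomData }
    }
    /// Consumes the input pin, so the old handle cannot be used again.
    fn into_alternate(self) -> Pin<Alternate> {
        Pin { number: self.number, _mode: PhantomData }
    }
}

/// Clock settings that can still be changed.
struct ClockSetup {
    sysclk_hz: u32,
}

/// Frozen clock settings; in a real crate only `freeze` could create this.
struct Clocks {
    sysclk_hz: u32,
}

impl ClockSetup {
    fn freeze(self) -> Clocks {
        Clocks { sysclk_hz: self.sysclk_hz }
    }
}

struct Serial {
    tx_pin: u8,
    ticks_per_bit: u32,
}

impl Serial {
    /// Only accepts a pin already in alternate-function mode, plus proof
    /// (`&Clocks`) that the clocks have been configured.
    fn new(tx: Pin<Alternate>, baud: u32, clocks: &Clocks) -> Serial {
        Serial { tx_pin: tx.number, ticks_per_bit: clocks.sysclk_hz / baud }
    }
}
```

Passing a `Pin<Input>` to `Serial::new`, or calling it before a `Clocks` value exists, is a type error rather than a run-time bug. The marker types are zero-sized, so the checks cost nothing at run time.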

Let's see an example:

#![no_std]

#![no_main]

use panic_halt as _; // panic handler

use core::fmt::Write; // brings write_fmt into scope for the writeln! call below

use cortex_m_rt::entry;

use tm4c123x_hal as hal;

use tm4c123x_hal::prelude::*;

use tm4c123x_hal::serial::{NewlineMode, Serial};

use tm4c123x_hal::sysctl;

#[entry]

fn main() -> ! {

let p = hal::Peripherals::take().unwrap();

let cp = hal::CorePeripherals::take().unwrap();

// Wrap up the SYSCTL struct into an object with a higher-layer API

let mut sc = p.SYSCTL.constrain();

// Pick our oscillation settings

sc.clock_setup.oscillator = sysctl::Oscillator::Main(

sysctl::CrystalFrequency::_16mhz,

sysctl::SystemClock::UsePll(sysctl::PllOutputFrequency::_80_00mhz),

);

// Configure the PLL with those settings

let clocks = sc.clock_setup.freeze();

// Wrap up the GPIO_PORTA struct into an object with a higher-layer API.

// Note it needs to borrow `sc.power_control` so it can power up the GPIO

// peripheral automatically.

let mut porta = p.GPIO_PORTA.split(&sc.power_control);

// Activate the UART.

let mut uart = Serial::uart0(

p.UART0,

// The transmit pin

porta

.pa1

.into_af_push_pull::<hal::gpio::AF1>(&mut porta.control),

// The receive pin

porta

.pa0

.into_af_push_pull::<hal::gpio::AF1>(&mut porta.control),

// No RTS or CTS required

(),

(),

// The baud rate

115200_u32.bps(),

// Output handling

NewlineMode::SwapLFtoCRLF,

// We need the clock rates to calculate the baud rate divisors

&clocks,

// We need this to power up the UART peripheral

&sc.power_control,

);

loop {

writeln!(uart, "Hello, World!\r\n").unwrap();

}

}

Semihosting

Semihosting is a mechanism that lets embedded devices do I/O on the host and is mainly used to log messages to the host console. Semihosting requires a debug session and pretty much nothing else (no extra wires!) so it's super convenient to use. The downside is that it's super slow: each write operation can take several milliseconds depending on the hardware debugger (e.g. ST-Link) you use.

The cortex-m-semihosting crate provides an API to do semihosting operations

on Cortex-M devices. The program below is the semihosting version of "Hello,

world!":

#![no_main]

#![no_std]

use panic_halt as _;

use cortex_m_rt::entry;

use cortex_m_semihosting::hprintln;

#[entry]

fn main() -> ! {

hprintln!("Hello, world!").unwrap();

loop {}

}

If you run this program on hardware you'll see the "Hello, world!" message within the OpenOCD logs.

$ openocd

(..)

Hello, world!

(..)

You do need to enable semihosting in OpenOCD from GDB first:

(gdb) monitor arm semihosting enable

semihosting is enabled

QEMU understands semihosting operations so the above program will also work with

qemu-system-arm without having to start a debug session. Note that you'll

need to pass the -semihosting-config flag to QEMU to enable semihosting

support; these flags are already included in the .cargo/config.toml file of the

template.

$ # this program will block the terminal

$ cargo run

Running `qemu-system-arm (..)

Hello, world!

There's also an exit semihosting operation that can be used to terminate the

QEMU process. Important: do not use debug::exit on hardware; this function

can corrupt your OpenOCD session and you will not be able to debug more programs

until you restart it.

#![no_main]

#![no_std]

use panic_halt as _;

use cortex_m_rt::entry;

use cortex_m_semihosting::debug;

#[entry]

fn main() -> ! {

let roses = "blue";

if roses == "red" {

debug::exit(debug::EXIT_SUCCESS);

} else {

debug::exit(debug::EXIT_FAILURE);

}

loop {}

}

$ cargo run

Running `qemu-system-arm (..)

$ echo $?

1

One last tip: you can set the panicking behavior to exit(EXIT_FAILURE). This

will let you write no_std run-pass tests that you can run on QEMU.

For convenience, the panic-semihosting crate has an "exit" feature that when

enabled invokes exit(EXIT_FAILURE) after logging the panic message to the host

stderr.

#![no_main]

#![no_std]

use panic_semihosting as _; // features = ["exit"]

use cortex_m_rt::entry;

use cortex_m_semihosting::debug;

#[entry]

fn main() -> ! {

let roses = "blue";

assert_eq!(roses, "red");

loop {}

}

$ cargo run

Running `qemu-system-arm (..)

panicked at 'assertion failed: `(left == right)`

left: `"blue"`,

right: `"red"`', examples/hello.rs:15:5

$ echo $?

1

NOTE: To enable this feature on panic-semihosting, edit your

Cargo.toml dependencies section where panic-semihosting is specified with:

panic-semihosting = { version = "VERSION", features = ["exit"] }

where VERSION is the version desired. For more information on dependency

features, check the "Specifying Dependencies" section of the Cargo book.

Panicking

Panicking is a core part of the Rust language. Built-in operations like indexing are runtime checked for memory safety. When out of bounds indexing is attempted this results in a panic.
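To make the runtime check concrete, here is a minimal sketch that runs on a desktop host. The helper name read_checked is hypothetical; it relies on the standard slice method get, which performs the same bounds check as indexing but reports a failure as None instead of panicking.

```rust
/// Hypothetical helper: reads `xs[i]` without risking a panic.
fn read_checked(xs: &[u32], i: usize) -> Option<u32> {
    // `get` performs the same bounds check as `xs[i]`, but returns `None`
    // for an out-of-bounds index instead of panicking.
    xs.get(i).copied()
}

fn main() {
    let xs = [0, 1, 2];
    assert_eq!(read_checked(&xs, 1), Some(1));
    // `xs.len()` is one past the last valid index, so `xs[xs.len()]` would panic.
    assert_eq!(read_checked(&xs, xs.len()), None);
}
```

Whether to index directly or to use get is a design choice: indexing keeps the code short and turns a logic error into a panic, while get forces the caller to handle the failure explicitly.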

In the standard library panicking has a defined behavior: it unwinds the stack of the panicking thread, unless the user opted for aborting the program on panics.

In programs without standard library, however, the panicking behavior is left

undefined. A behavior can be chosen by declaring a #[panic_handler] function.

This function must appear exactly once in the dependency graph of a program,

and must have the following signature: fn(&PanicInfo) -> !, where PanicInfo

is a struct containing information about the location of the panic.

Given that embedded systems range from user-facing to safety-critical (cannot

crash), there's no one-size-fits-all panicking behavior, but there are plenty of

commonly used behaviors. These common behaviors have been packaged into crates

that define the #[panic_handler] function. Some examples include:

- panic-abort. A panic causes the abort instruction to be executed.

- panic-halt. A panic causes the program, or the current thread, to halt by entering an infinite loop.

- panic-itm. The panicking message is logged using the ITM, an ARM Cortex-M specific peripheral.

- panic-semihosting. The panicking message is logged to the host using the semihosting technique.

You may be able to find even more crates searching for the panic-handler

keyword on crates.io.

A program can pick one of these behaviors simply by linking to the corresponding crate. The fact that the panicking behavior is expressed in the source of an application as a single line of code is not only useful as documentation but can also be used to change the panicking behavior according to the compilation profile. For example:

#![no_main]

#![no_std]

// dev profile: easier to debug panics; can put a breakpoint on `rust_begin_unwind`

#[cfg(debug_assertions)]

use panic_halt as _;

// release profile: minimize the binary size of the application

#[cfg(not(debug_assertions))]

use panic_abort as _;

// ..

In this example the crate links to the panic-halt crate when built with the

dev profile (cargo build), but links to the panic-abort crate when built

with the release profile (cargo build --release).
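The same debug_assertions condition can be observed on a desktop host. The sketch below is only an illustration: the function name selected_panic_crate is hypothetical, and it merely reports which crate the example above would link under the current profile. It assumes the default Cargo profiles, where debug assertions are on for dev builds and off for release builds unless overridden.

```rust
/// Hypothetical helper: names the panic crate the example above would link
/// under the profile this code is compiled with.
fn selected_panic_crate() -> &'static str {
    // `cfg!(debug_assertions)` is resolved at compile time, just like the
    // `#[cfg(debug_assertions)]` attributes on the `use` statements.
    if cfg!(debug_assertions) {
        "panic-halt"
    } else {
        "panic-abort"
    }
}

fn main() {
    println!("this build would link {}", selected_panic_crate());
}
```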

The use panic_abort as _; form of the use statement is used to ensure the panic_abort panic handler is included in our final executable while making it clear to the compiler that we won't explicitly use anything from the crate. Without the as _ rename, the compiler would warn that we have an unused import. Sometimes you might see extern crate panic_abort instead, which is an older style used before the 2018 edition of Rust, and should now only be used for "sysroot" crates (those distributed with Rust itself) such as proc_macro, alloc, std, and test.

An example

Here's an example that tries to index an array beyond its length. The operation results in a panic.

#![no_main]

#![no_std]

use panic_semihosting as _;

use cortex_m_rt::entry;

#[entry]

fn main() -> ! {

let xs = [0, 1, 2];

let i = xs.len();

let _y = xs[i]; // out of bounds access

loop {}

}

This example chose the panic-semihosting behavior which prints the panic

message to the host console using semihosting.

$ cargo run

Running `qemu-system-arm -cpu cortex-m3 -machine lm3s6965evb (..)

panicked at 'index out of bounds: the len is 3 but the index is 3', src/main.rs:12:13

You can try changing the behavior to panic-halt and confirm that no message is

printed in that case.

Exceptions

Exceptions, and interrupts, are a hardware mechanism by which the processor handles asynchronous events and fatal errors (e.g. executing an invalid instruction). Exceptions imply preemption and involve exception handlers, subroutines executed in response to the signal that triggered the event.

The cortex-m-rt crate provides an exception attribute to declare exception

handlers.

// Exception handler for the SysTick (System Timer) exception

#[exception]

fn SysTick() {

// ..

}

Other than the exception attribute, exception handlers look like plain

functions, but there's one more difference: exception handlers cannot be

called by software. Following the previous example, the statement SysTick();

would result in a compilation error.

This behavior is pretty much intended and it's required to provide a feature:

static mut variables declared inside exception handlers are safe to use.

#[exception]

fn SysTick() {

static mut COUNT: u32 = 0;

// `COUNT` has transformed to type `&mut u32` and it's safe to use

*COUNT += 1;

}

As you may know, using static mut variables in a function makes it

non-reentrant. It's undefined behavior to call a non-reentrant function,

directly or indirectly, from more than one exception / interrupt handler or from

main and one or more exception / interrupt handlers.

Safe Rust must never result in undefined behavior so non-reentrant functions

must be marked as unsafe. Yet I just said that exception handlers can safely

use static mut variables. How is this possible? This is possible because

exception handlers cannot be called by software, so reentrancy is not

possible. These handlers are called by the hardware itself which is assumed to be physically non-concurrent.

As a result, in the context of exception handlers in embedded systems, the absence of concurrent invocations of the same handler ensures that there are no reentrancy issues, even if the handler uses static mutable variables.

In a multicore system, where multiple processor cores are executing code concurrently, the potential for reentrancy issues becomes relevant again, even within exception handlers. While each core may have its own set of exception handlers, there can still be scenarios where multiple cores attempt to execute the same exception handler simultaneously.

To address this concern in a multicore environment, proper synchronization mechanisms need to be employed within the exception handlers to ensure that access to shared resources is properly coordinated among the cores. This typically involves the use of techniques such as locks, semaphores, or atomic operations to prevent data races and maintain data integrity.
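As one illustration of the atomic-operations option, here is a minimal sketch of a tick counter that needs neither static mut nor unsafe. It runs on a desktop host and uses only core. The names TICKS, on_tick and ticks are hypothetical. Note the assumption that the target supports atomic read-modify-write operations: targets without them, such as thumbv6m-none-eabi (Cortex-M0), only offer atomic load and store, so fetch_add is not available there.

```rust
use core::sync::atomic::{AtomicU32, Ordering};

/// Shared tick counter. An atomic can live in a plain (non-`mut`) static.
static TICKS: AtomicU32 = AtomicU32::new(0);

/// Hypothetical handler body: records one tick and returns the new total.
fn on_tick() -> u32 {
    // `fetch_add` returns the previous value, so add 1 to get the new total.
    TICKS.fetch_add(1, Ordering::Relaxed).wrapping_add(1)
}

/// Reads the current total, for example from `main`.
fn ticks() -> u32 {
    TICKS.load(Ordering::Relaxed)
}

fn main() {
    let before = ticks();
    on_tick();
    on_tick();
    assert_eq!(ticks(), before.wrapping_add(2));
}
```

Relaxed ordering is enough here because the counter is the only shared state; if other data had to become visible together with the count, stronger orderings or a lock would be needed.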

Note that the exception attribute transforms definitions of static variables inside the function by wrapping them into unsafe blocks and providing us with new appropriate variables of type &mut of the same name. Thus we can dereference the reference via * to access the values of the variables without needing to wrap them in an unsafe block.
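The idea behind that transformation can be approximated by hand. The following host-runnable sketch is not the attribute's actual output; the names sys_tick_body and sys_tick_wrapper are hypothetical. It only shows the shape of the trick: the unsafe access to the static is confined to a wrapper, and the handler body receives an ordinary &mut u32. Because plain Rust cannot prevent software from calling the wrapper, the wrapper has to be marked unsafe here, which is exactly the restriction the attribute lifts by making handlers uncallable.

```rust
use core::ptr::addr_of_mut;

/// The part a user would write: `count` is an ordinary `&mut u32`.
fn sys_tick_body(count: &mut u32) -> u32 {
    *count += 1;
    *count
}

/// Hand-written stand-in for a generated wrapper.
///
/// # Safety
/// Must never run reentrantly or concurrently with itself, otherwise two
/// `&mut` references to `COUNT` would exist at the same time.
unsafe fn sys_tick_wrapper() -> u32 {
    static mut COUNT: u32 = 0;
    // SAFETY: the caller guarantees exclusive access for the whole call.
    let count: &mut u32 = unsafe { &mut *addr_of_mut!(COUNT) };
    sys_tick_body(count)
}

fn main() {
    // SAFETY: single-threaded, and the calls are strictly sequential.
    let first = unsafe { sys_tick_wrapper() };
    let second = unsafe { sys_tick_wrapper() };
    assert_eq!(second, first + 1);
}
```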

A complete example

Here's an example that uses the system timer to raise a SysTick exception

roughly every second. The SysTick exception handler keeps track of how many

times it has been called in the COUNT variable and then prints the value of

COUNT to the host console using semihosting.

NOTE: You can run this example on any Cortex-M device; you can also run it on QEMU.

#![deny(unsafe_code)]

#![no_main]

#![no_std]

use panic_halt as _;

use core::fmt::Write;

use cortex_m::peripheral::syst::SystClkSource;

use cortex_m_rt::{entry, exception};

use cortex_m_semihosting::{

debug,

hio::{self, HostStream},

};

#[entry]

fn main() -> ! {

let p = cortex_m::Peripherals::take().unwrap();

let mut syst = p.SYST;

// configures the system timer to trigger a SysTick exception every second

syst.set_clock_source(SystClkSource::Core);

// this is configured for the LM3S6965 which has a default CPU clock of 12 MHz

syst.set_reload(12_000_000);

syst.clear_current();

syst.enable_counter();

syst.enable_interrupt();

loop {}

}

#[exception]

fn SysTick() {

static mut COUNT: u32 = 0;

static mut STDOUT: Option<HostStream> = None;

*COUNT += 1;

// Lazy initialization

if STDOUT.is_none() {

*STDOUT = hio::hstdout().ok();

}

if let Some(hstdout) = STDOUT.as_mut() {

write!(hstdout, "{}", *COUNT).ok();

}

// IMPORTANT omit this `if` block if running on real hardware or your

// debugger will end in an inconsistent state

if *COUNT == 9 {

// This will terminate the QEMU process

debug::exit(debug::EXIT_SUCCESS);

}

}

$ tail -n5 Cargo.toml

[dependencies]

cortex-m = "0.5.7"

cortex-m-rt = "0.6.3"

panic-halt = "0.2.0"

cortex-m-semihosting = "0.3.1"

$ cargo run --release

Running `qemu-system-arm -cpu cortex-m3 -machine lm3s6965evb (..)

123456789
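The example above loads 12_000_000 into the timer, which gives a tick roughly every second on a 12 MHz clock. Strictly speaking, the SysTick counter counts down from the reload value to zero, so one period lasts reload + 1 clock cycles, and the reload register is 24 bits wide. The host-runnable sketch below works through that arithmetic; the helper systick_reload and the constant SYST_RELOAD_MAX are hypothetical names, not part of the cortex-m API.

```rust
/// Largest value the 24-bit SysTick reload register can hold.
const SYST_RELOAD_MAX: u32 = 0x00FF_FFFF;

/// Hypothetical helper: reload value for one tick every `period_ms`
/// milliseconds with a timer clock of `clock_hz`. Returns `None` if the
/// period rounds down to zero cycles or does not fit in 24 bits.
fn systick_reload(clock_hz: u32, period_ms: u32) -> Option<u32> {
    // Widen to u64 so the multiplication cannot overflow.
    let cycles = u64::from(clock_hz) * u64::from(period_ms) / 1_000;
    // The counter wraps every `reload + 1` cycles, hence the `- 1`.
    let reload = cycles.checked_sub(1)?;
    if reload <= u64::from(SYST_RELOAD_MAX) {
        Some(reload as u32)
    } else {
        None
    }
}

fn main() {
    // 12 MHz, one second: 12_000_000 cycles, so the reload value is 11_999_999.
    assert_eq!(systick_reload(12_000_000, 1_000), Some(11_999_999));
    // 12 MHz, two seconds: 24_000_000 cycles do not fit in 24 bits.
    assert_eq!(systick_reload(12_000_000, 2_000), None);
}
```

The one-cycle difference is negligible at this period, which is why the example can describe its timing as "roughly every second"; for longer periods the handler would have to count several shorter ticks.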